All non-human traffic that accesses a site is referred to as bot traffic.

Sooner or later your website will receive visits from bots, whether it is a well-known news site or the site of a small, recently launched company.

Bot traffic is often interpreted as intrinsically destructive; however, that isn’t always true.

Without a doubt, specific bot behavior is intended to be hostile and can harm data.

Malicious bots can be used for data scraping, DDoS (distributed denial of service) attacks, or credential stuffing.

Proven strategies for identifying and removing bot traffic

Web experts can examine direct network access requests to websites to spot potential bot traffic.

A built-in web analytics tool can also help detect bot traffic. First, though, let’s cover some essential background on bots before turning to the anomalies that distinguish bot activity.

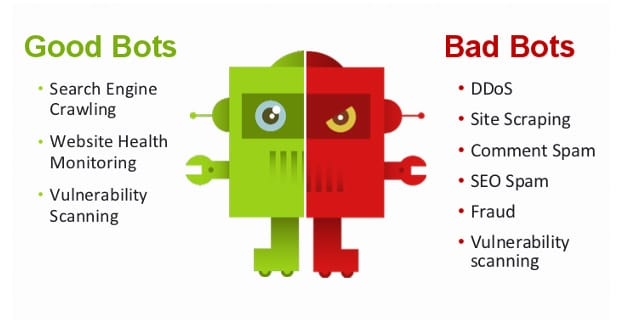

What is good bot traffic?

The bots below are trustworthy and provide useful services for apps and websites.

1. Bots for search engines

The best-known good bots are search engine bots. They crawl the web and help site owners get their pages listed in Bing, Google, and Yahoo search results, which makes them useful for SEO (search engine optimization).

2. Monitoring bots

Monitoring bots help publishers ensure that their site is secure, usable, and performing at its best. They check whether a website is still accessible by pinging it periodically.

These bots benefit site owners since they instantly notify the publishers if something malfunctions or the website goes down.

3. SEO crawlers

SEO crawlers use algorithms to retrieve and analyze a website and its competitors, providing information and metrics on page clicks, visitors, and text.

Web administrators can then use these insights to shape their content for better organic search performance and more referral traffic.

4. Copyright bots

Copyright bots search the web for copyrighted material, such as photos, to ensure that nobody is using it without authorization.

What is defined as bad bot traffic?

Contrary to the beneficial bots we previously discussed, harmful bot activity can affect your site and do substantial damage when left unchecked.

The results can range from delivering spam or misleading visitors to more disruptive things, like ad fraud.

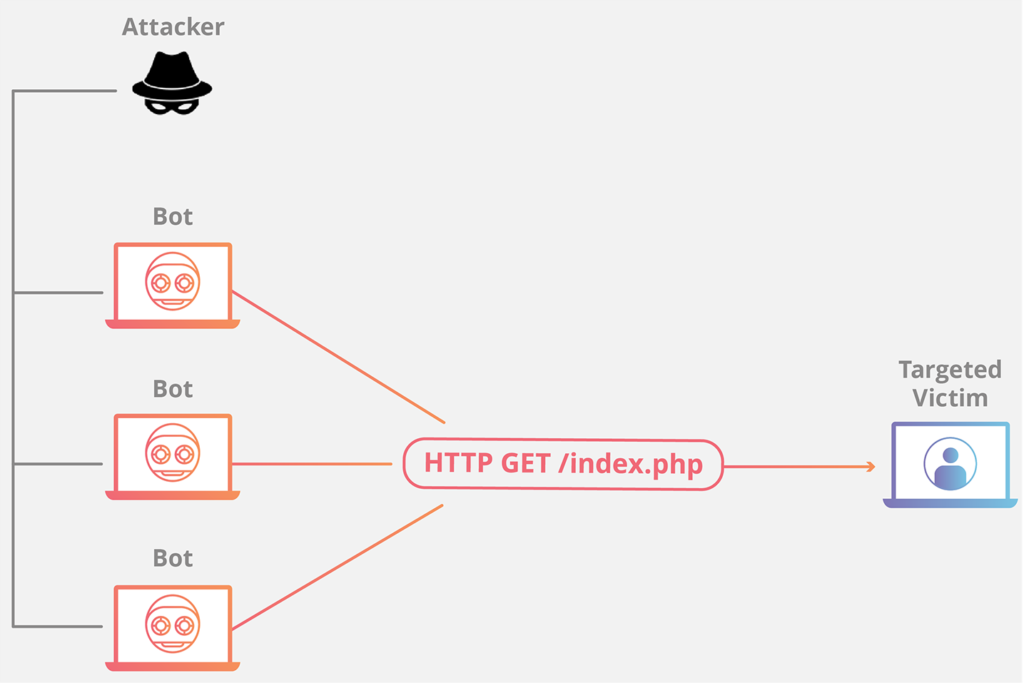

1. DDoS networks

Among the most notorious and dangerous bots are DDoS bots.

These programs are installed on the computers of unwitting victims and are used to bring down a particular site or server.

2. Web scrapers

Web scrapers scrape websites for valuable information like email addresses or contact details. In rare cases, they can copy text and photos from sites and utilize them without authorization on some other website or social media account.

Many advanced bots generate harmful traffic that targets paid advertising rather than the website itself. Instead of simply creating unwanted website traffic, these bots commit ad fraud. As the term suggests, this automated traffic generates clicks on paid advertisements and costs advertising agencies significant amounts of money.

Publishers therefore have several reasons to employ bot detection techniques that help filter out illicit traffic, which is frequently camouflaged as regular traffic.

3. Vulnerability scanners

Numerous malicious bots scan huge numbers of sites for weaknesses and report them to their operators. Unlike legitimate bots that alert the site owner, these bots pass the data to third parties, who can sell it or use it to break into websites.

4. Spam bots

Spam bots are primarily built to post comments, written by the bot’s author, in a webpage’s discussion threads.

While CAPTCHA (Completely Automated Public Turing Test to Tell Computers and Humans Apart) checks are intended to screen out the software-driven registration processes, they may not always be effective in stopping these bots from creating accounts.

How do bots impact website performance?

Organizations that don’t understand how to recognize, handle, and monitor bot traffic can be seriously harmed by it.

Websites that sell goods in limited supply or that depend on advertisements are especially vulnerable.

Bots that visit websites with ads and interact with different page elements can generate bogus clicks.

This is called click fraud. Although it may raise ad revenue at first, once digital advertising platforms identify the fraud, the website and its operator will typically be removed from their system.

Stock hoarding bots may essentially shut down eCommerce websites with little stock by stuffing carts with tons of goods, blocking real customers from making purchases.

Your website may slow down when a bot frequently asks it for data. This implies that the website will load slowly for all users, which might seriously affect an internet business.

In extreme cases, excessive bot activity can bring your complete website down.

Bots are also becoming more sophisticated as technology advances.

According to a survey, bots made up over 41% of all Internet traffic in 2021 — with harmful bots accounting for over 25% of all traffic.

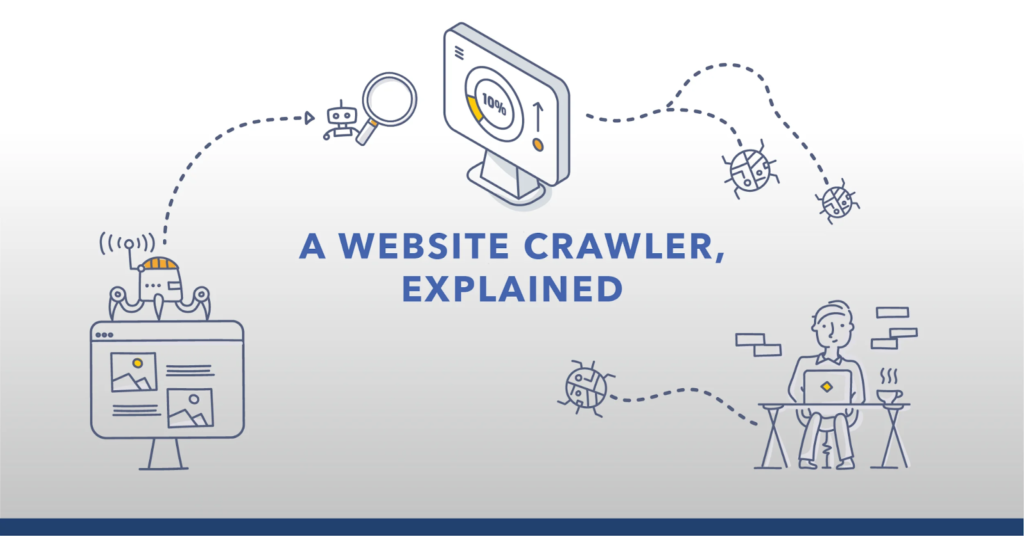

Web publishers or designers can spot bot activity by looking at the network queries made to their websites.

Identifying bots in web traffic can be further aided by using an embedded analytics platform such as Google Analytics.

How can Google Analytics detect and block bot traffic?

There are several straightforward ways to filter bot traffic out of your Google Analytics data. Here is the first option:

- Sign up for a Google Analytics account if you don’t already have one.

- Go to the Google Analytics admin console.

- Next, select the View option and then View Settings.

- Scroll down to the Bot Filtering option.

- If the checkbox is not checked, select it.

- Then click Save.

The second option is to construct a filter that excludes any anomalous activity you’ve found.

You could create a new View in which the Bot Filtering checkbox is disabled, and add filters to it that eliminate malicious traffic.

Once you have checked that a filter works, add it to the Master View.

Thirdly, you could use the Referral Exclusion List, which can be found in the Admin area under Tracking Info in the Property column.

This list lets you exclude sites from your Google Analytics metrics. You can therefore keep any suspicious URLs (Uniform Resource Locators) out of your future data by adding them to the list.

How to spot bot activity on websites?

1. Extraordinarily high pageviews

Bots are typically to blame when a site has an abrupt, unanticipated, and unprecedented increase in page visits.
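One rough way to look for such a spike is to compare each day's pageviews with the days just before it. The sketch below is only an illustration: the function name `find_pageview_spikes`, the 7-day window, and the threshold of 3 standard deviations are assumptions, and the numbers are made-up sample data rather than real analytics.

```python
from statistics import mean, stdev

def find_pageview_spikes(daily_pageviews, window=7, threshold=3.0):
    """Return indices of days that exceed the trailing mean by more than
    `threshold` sample standard deviations of the previous `window` days."""
    spikes = []
    for i in range(window, len(daily_pageviews)):
        history = daily_pageviews[i - window:i]
        mu = mean(history)
        # Use at least 1.0 so a perfectly flat history does not divide by zero.
        sigma = max(stdev(history), 1.0)
        if (daily_pageviews[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes

# Hypothetical daily pageview counts; day 8 jumps far above the usual level.
pageviews = [1020, 980, 1010, 995, 1005, 990, 1000, 1015, 4800, 1002]
print(find_pageview_spikes(pageviews))  # [8]
```

A flagged day is only a prompt to investigate, since a successful campaign or a news mention can produce the same pattern.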

2. Extraordinarily high bounce rates

The bounce rate is the proportion of visitors who arrive on your site but do nothing else while they’re there. An unexpected increase in bounce rates can signify that bots have been steered to a specific page.
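To see which page bots might have been steered to, it can help to compute the bounce rate per landing page. This is a minimal sketch that assumes each session is available as a `(landing_page, pageviews)` pair and treats a single-pageview session as a bounce; the function name and sample data are hypothetical.

```python
from collections import defaultdict

def bounce_rate_by_page(sessions):
    """Return {landing_page: bounce_rate}, where a bounce is a session
    that viewed exactly one page."""
    totals = defaultdict(int)
    bounces = defaultdict(int)
    for landing_page, pageviews in sessions:
        totals[landing_page] += 1
        if pageviews == 1:
            bounces[landing_page] += 1
    return {page: bounces[page] / totals[page] for page in totals}

# Hypothetical sessions: (landing page, number of pages viewed).
sessions = [
    ("/home", 1), ("/home", 3),
    ("/promo", 1), ("/promo", 1), ("/promo", 1), ("/promo", 2),
]
print(bounce_rate_by_page(sessions))  # {'/home': 0.5, '/promo': 0.75}
```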

3. Unexpectedly long or short session durations

The time visitors stay on a site is known as session duration. For human visitors, this figure tends to stay fairly steady. An unexpected rise in session length is therefore probably due to a bot browsing the website unusually slowly. On the other hand, if sessions are very short, a bot may be crawling web pages much more quickly than a person could.
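Both extremes can be checked with simple cut-offs. The sketch below assumes sessions are available as `(session_id, duration_seconds, pageviews)` tuples; the thresholds of one second per page and two hours per session are arbitrary assumptions that would need tuning for a real site.

```python
def flag_suspicious_sessions(sessions, min_seconds_per_page=1.0, max_duration=7200):
    """Flag sessions that move through pages faster than a person could,
    or that last implausibly long."""
    flagged = []
    for session_id, duration_seconds, pageviews in sessions:
        seconds_per_page = duration_seconds / max(pageviews, 1)
        if seconds_per_page < min_seconds_per_page:
            flagged.append((session_id, "too fast"))
        elif duration_seconds > max_duration:
            flagged.append((session_id, "too long"))
    return flagged

# Hypothetical sessions: (id, duration in seconds, pages viewed).
sessions = [("a", 180, 4), ("b", 3, 40), ("c", 14400, 2)]
print(flag_suspicious_sessions(sessions))
# [('b', 'too fast'), ('c', 'too long')]
```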

4. Junk conversions

A rise in fake conversions can reveal bot activity. Junk conversions show up as a surge in accounts created with nonsensical email addresses or in web forms completed with a false name, mobile number, and address.

5. Increase in visitors from a surprising location

Another common sign of bot activity is a sharp increase in web traffic from a particular geographical region, especially one where residents are unlikely to speak the website’s language.
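A geographic shift of this kind can be spotted by comparing each country's share of recent visits with its share in an earlier baseline period. The following is a sketch under stated assumptions: visits are reduced to a list of country codes, `"XX"` is a placeholder code, and the 10% minimum share and fivefold growth factor are arbitrary.

```python
from collections import Counter

def unusual_regions(baseline_visits, recent_visits, min_share=0.10, growth_factor=5.0):
    """Return countries whose share of recent traffic is at least `min_share`
    and more than `growth_factor` times their baseline share."""
    baseline = Counter(baseline_visits)
    recent = Counter(recent_visits)
    baseline_total = sum(baseline.values())
    recent_total = sum(recent.values())
    flagged = []
    for country, count in recent.items():
        recent_share = count / recent_total
        baseline_share = baseline[country] / baseline_total if baseline_total else 0.0
        if recent_share >= min_share and recent_share > growth_factor * baseline_share:
            flagged.append(country)
    return sorted(flagged)

# Hypothetical data: one country code per visit.
baseline = ["US"] * 80 + ["GB"] * 19 + ["XX"] * 1
recent = ["US"] * 60 + ["GB"] * 15 + ["XX"] * 25
print(unusual_regions(baseline, recent))  # ['XX']
```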

How can you stop bot traffic on websites?

Once a business or organization has mastered the art of spotting bot traffic, it’s also crucial that they acquire the expertise and resources required to prevent bot traffic from harming their website.

The following resources can reduce threats:

1. Legal arbitrage

Paying for online traffic to guarantee high-yielding PPC (pay-per-click) or CPM (cost per mille) based initiatives is called traffic arbitrage.

Website owners can minimize the chances of malicious bot traffic by buying traffic only from reputable providers.

2. Robots.txt

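This file tells crawlers which parts of a website they may access. Well-behaved bots follow its rules, but malicious bots can simply ignore them, so it works best alongside the other measures listed here.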
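To illustrate how a compliant crawler interprets robots.txt rules, the sketch below parses a small example file with Python's standard `urllib.robotparser` module. The bot name `BadBot`, the `/admin/` path, and the `example.com` URLs are placeholders chosen for the example.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content: block one named bot entirely,
# and keep every other crawler out of /admin/.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("BadBot", "https://example.com/index.html"))     # False
print(parser.can_fetch("Googlebot", "https://example.com/index.html"))  # True
print(parser.can_fetch("Googlebot", "https://example.com/admin/login")) # False
```

These rules are advisory: a crawler has to choose to read and obey them.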

3. Alerts with JavaScript

Site owners can add relevant JavaScript alerts to receive notifications anytime a bot enters the website.

4. DDoS blocklists

Publishers can reduce the number of DDoS attacks by compiling a list of offending IP (Internet Protocol) addresses and blocking visits from them.
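A list like this can be checked with Python's standard `ipaddress` module. The sketch below is illustrative only: the addresses come from ranges reserved for documentation, and in practice the blocking would usually be enforced at a firewall, CDN, or web server rather than in application code.

```python
import ipaddress

# Hypothetical blocklist: one whole network and one single address.
BLOCKED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("203.0.113.0/24", "198.51.100.17/32")
]

def is_blocked(client_ip):
    """Return True if the client IP falls inside any blocked network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in BLOCKED_NETWORKS)

print(is_blocked("203.0.113.45"))   # True
print(is_blocked("198.51.100.17"))  # True
print(is_blocked("192.0.2.10"))     # False
```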

5. Challenge-response tests

Using CAPTCHA on a sign-up or download form is among the easiest and most popular ways to identify bot traffic. It’s useful for blocking spam bots and automated downloads.

6. Log files

Web administrators with strong metrics and data analytics knowledge can analyze server error logs to identify and resolve bot-related website faults.
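As a starting point for this kind of analysis, the sketch below counts requests and error responses per IP address. It assumes the logs use the common "combined" access log format; the regular expression, the function name `summarize_log`, and the sample lines are all illustrative and would need adapting to a real server's log layout.

```python
import re
from collections import Counter

# Matches the usual "combined" access log layout (an assumption about the server).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

def summarize_log(lines, top_n=3):
    """Return the busiest IPs and a per-IP count of 4xx/5xx responses."""
    requests_per_ip = Counter()
    errors_per_ip = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that do not fit the expected format
        ip = match["ip"]
        requests_per_ip[ip] += 1
        if match["status"].startswith(("4", "5")):
            errors_per_ip[ip] += 1
    return requests_per_ip.most_common(top_n), errors_per_ip

# Made-up sample log lines.
LOG_LINES = [
    '203.0.113.9 - - [10/Oct/2022:13:55:36 +0000] "POST /login HTTP/1.1" 401 512 "-" "python-requests/2.28"',
    '203.0.113.9 - - [10/Oct/2022:13:55:37 +0000] "POST /login HTTP/1.1" 401 512 "-" "python-requests/2.28"',
    '203.0.113.9 - - [10/Oct/2022:13:55:38 +0000] "POST /login HTTP/1.1" 401 512 "-" "python-requests/2.28"',
    '192.0.2.4 - - [10/Oct/2022:13:56:02 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]
top_ips, errors = summarize_log(LOG_LINES)
print(top_ips)        # [('203.0.113.9', 3), ('192.0.2.4', 1)]
print(dict(errors))   # {'203.0.113.9': 3}
```

An address that sends many requests and receives mostly errors, as in the repeated failed logins above, is a reasonable candidate for closer inspection.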

Conclusion

Bot traffic shouldn’t be disregarded because it may be costly for any business with a web presence.

Although there are multiple ways to limit malicious bot traffic, purchasing a dedicated bot control solution is the most effective.

Author’s Bio: Atreyee Chowdhury works full-time as a Content Manager with a Fortune 1 retail giant. She is passionate about writing and has helped many small and medium-scale businesses achieve their content marketing goals with her carefully crafted and compelling content. You can follow her on LinkedIn.